Index

- 1. Data Gathering

- 2. Data Preprocessing

- 3. Text vectorizing

- 4. K-means optimization

- 5. Some insights about the data

- 6. Applying PCA (Principal Component Analysis)

# data manipulation
import pandas as pd
import numpy as np
import time
import re
from tqdm import tqdm

# text preprocessing
import nltk
from nltk.corpus import stopwords
from nltk.stem import WordNetLemmatizer
# corpora used below: the English stopword list and WordNet (needed by the lemmatizer;
# 'omw-1.4' is also required by WordNet in some NLTK versions)
nltk.download('stopwords')
nltk.download('wordnet')
nltk.download('omw-1.4')

# visualizing
import matplotlib.pyplot as plt
import altair as alt
import seaborn as sns
sns.set_style('darkgrid')

# clusters
from sklearn import metrics
from sklearn.cluster import MiniBatchKMeans, KMeans
from sklearn.feature_extraction.text import TfidfVectorizer, CountVectorizer
from sklearn.datasets import fetch_20newsgroups

# PCA Decomposition
from sklearn.decomposition import PCA

# silhouette method
from sklearn.metrics import silhouette_samples, silhouette_score

# annoying error messages =P
import warnings
warnings.filterwarnings("ignore")

Data Gathering

- In this project I'll be working with the 20newsgroups dataset.

- The 20newsgroups dataset comprises around 18,000 newsgroup posts on 20 topics.

# https://scikit-learn.org/0.19/datasets/twenty_newsgroups.html

newsgroups_train = fetch_20newsgroups(subset='train')

#show topics

print(list(newsgroups_train.target_names))

['alt.atheism', 'comp.graphics', 'comp.os.ms-windows.misc', 'comp.sys.ibm.pc.hardware', 'comp.sys.mac.hardware', 'comp.windows.x', 'misc.forsale', 'rec.autos', 'rec.motorcycles', 'rec.sport.baseball', 'rec.sport.hockey', 'sci.crypt', 'sci.electronics', 'sci.med', 'sci.space', 'soc.religion.christian', 'talk.politics.guns', 'talk.politics.mideast', 'talk.politics.misc', 'talk.religion.misc']

categories = [

'comp.graphics',

'comp.os.ms-windows.misc',

'rec.sport.baseball',

'talk.politics.guns',

'talk.politics.mideast',

'talk.politics.misc',

'rec.sport.hockey',

'alt.atheism',

'soc.religion.christian',

]

dataset = fetch_20newsgroups(subset='train', categories=categories, shuffle=True, remove=('headers', 'footers', 'quotes'))

df1 = pd.DataFrame(dataset['data'], columns = ['content'])

Data Preprocessing

Lemmatizing and removing stopwords

news = df1.copy()

import string

# build the lemmatizer and the stopword set once, instead of on every call / every word
lemmatizer = WordNetLemmatizer()
stop_set = set(stopwords.words('english'))

def text_process(text):
    # remove punctuation: '!"#$%&\'()*+,-./:;<=>?@[\\]^_`{|}~'
    nopunc = [char for char in text if char not in string.punctuation]
    # remove digits
    nopunc = ''.join([i for i in nopunc if not i.isdigit()])
    # split each post into words and lowercase them BEFORE removing stopwords,
    # so that capitalized stopwords ("I", "The") are removed too
    words = [word.lower() for word in nopunc.split()]
    words = [word for word in words if word not in stop_set]
    # lemmatize the list of words created
    return [lemmatizer.lemmatize(word) for word in words]

t0 = time.time()
news['filtered_content'] = news['content'].apply(text_process).apply(lambda x: " ".join(x))
print(f'Done! Elapsed time: {round(time.time() - t0, 1)} seconds')

Done! Elapsed time: 277.9 seconds

news.shape

(5026, 2)

news.head()

| | content | filtered_content |
|---|---|---|
| 0 | \n\nI have discussed this with my girlfriend o... | i discussed girlfriend often i consider marrie... |
| 1 | \nYou might want to re-think your attitude abo... | you might want rethink attitude holocaust read... |
| 2 | \nIt's bad jokes like that which draws crohns,... | it bad joke like draw crohn i mean groan crowd... |
| 3 | \n\nIf anyone gets the New York Times, the Edi... | if anyone get new york time edit page transcri... |
| 4 | I apologize for the long delay in getting a re... | i apologize long delay getting response posted... |

Text vectorizing

- Vectorization is the process of converting text into a numerical representation.

- With TF-IDF, each value indicates how important a word is to a document within the corpus.

TFIDF

- TF-IDF is the product of how frequent a word is in a document and how rare that word is across the whole corpus.

- TF stands for Term Frequency.

- It counts how many times a word appears in a given document.

- IDF stands for Inverse Document Frequency.

- IDF is based on the number of documents in the corpus that contain the word.

- In a nutshell, IDF measures how rare or how common a word is across the entire dataset.

- Rare words get higher IDF values than frequent words.

- In a classification model, for example, rare words tend to be more informative than words that appear almost everywhere.

stop_words = stopwords.words('english')

vectorizer = TfidfVectorizer(

min_df = 5,

max_df = 0.95,

max_features = None,

analyzer = 'word',

stop_words = stop_words)

tfidf_text = vectorizer.fit_transform(news.filtered_content)

print(f'n_samples:{tfidf_text.shape[0]}, num_features: {tfidf_text.shape[1]}')

n_samples:5026, num_features: 8678

- `max_df` is used for removing terms that appear too frequently, also known as “corpus-specific stop words”.
  - max_df = 0.50 means “ignore terms that appear in more than 50% of the documents”.
  - max_df = 25 means “ignore terms that appear in more than 25 documents”.

- `min_df` is used for removing terms that appear too infrequently.
  - min_df = 0.01 means “ignore terms that appear in fewer than 1% of the documents”.
  - min_df = 5 means “ignore terms that appear in fewer than 5 documents”.

vec = tfidf_text

K-means optimization

- K-means is an unsupervised learning algorithm.

- It groups unlabeled data points into K clusters, assigning each point to the cluster with the nearest centroid (the mean of that cluster's points).

- K, the number of groups, has to be chosen before training, so we start by trying several values of K and comparing how well each one performs.

- Choosing an optimal K is often difficult.

- To help with that, we can use metrics such as the elbow method and the silhouette score.
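Below is a minimal from-scratch sketch of Lloyd's algorithm, the classic K-means iteration, written with NumPy only. It is an illustration under simplifying assumptions (random initialization from the data points, a fixed iteration cap, hypothetical 2-D toy data) and not scikit-learn's implementation, which by default uses k-means++ initialization and several restarts (`n_init`).

```python
import numpy as np

def kmeans_lloyd(X, k, n_iter=100, seed=0):
    """Plain Lloyd's algorithm: alternate assignment and centroid-update steps."""
    rng = np.random.default_rng(seed)
    # initialize centroids with k distinct, randomly chosen data points
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # assignment step: each point goes to its nearest centroid (squared Euclidean)
        dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # update step: move each centroid to the mean of its assigned points
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        converged = np.allclose(new_centroids, centroids)
        centroids = new_centroids
        if converged:
            break
    # final assignment and within-cluster sum of squared errors (the "inertia")
    dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    labels = dists.argmin(axis=1)
    inertia = dists[np.arange(len(X)), labels].sum()
    return labels, centroids, inertia

# hypothetical toy data: two well-separated blobs
toy_X = np.array([[0.0, 0.0], [0.1, 0.2], [0.2, 0.1],
                  [5.0, 5.0], [5.1, 5.2], [5.2, 5.1]])
toy_labels, toy_centroids, toy_inertia = kmeans_lloyd(toy_X, k=2)
print(toy_labels, round(toy_inertia, 3))
```

The returned `inertia` is the same quantity the elbow method tracks: the sum of squared distances from each point to its assigned centroid.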

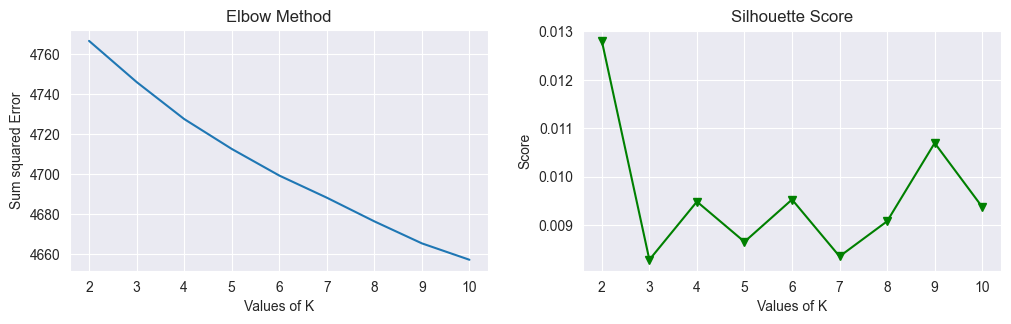

Elbow Method

- The elbow method measures the Euclidean distance from each data point to the centroid of its cluster.

- These distances are squared and summed; the result is the sum of squared errors (SSE), which scikit-learn's KMeans exposes as inertia_.

- As the number of clusters increases, each data point has a cluster center closer to it, so the sum of squared errors keeps decreasing.

- The goal is to find the "elbow" of the graph: the point after which adding more clusters only gives smaller and smaller decreases in the sum of squared errors. That elbow is a good candidate for the optimal number of clusters.
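As a sketch of the elbow computation, the loop below fits KMeans for several values of K on hypothetical synthetic data from `make_blobs` (four generated groups) and checks that the sum of squared errors computed by hand matches the `inertia_` attribute that the elbow graph plots.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# hypothetical synthetic data with 4 generated groups
blob_X, _ = make_blobs(n_samples=300, centers=4, random_state=42)

elbow_sse = {}
for k in range(1, 9):
    km = KMeans(n_clusters=k, n_init=10, random_state=42).fit(blob_X)
    # SSE by hand: squared distance from each point to its assigned centroid, summed
    sse = ((blob_X - km.cluster_centers_[km.labels_]) ** 2).sum()
    elbow_sse[k] = (sse, km.inertia_)

for k, (sse, inertia) in elbow_sse.items():
    print(f"k={k}  SSE={sse:.1f}  inertia_={inertia:.1f}")
```

Plotting `inertia_` against K, as the elbow graph does, shows where the decrease starts to flatten.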

Silhouette Method

- Sometimes it's hard to find a clear elbow point on the elbow graph, so we need another strategy to find the optimal number: the silhouette method.

- For each data point, the silhouette method compares a, the mean distance to the other points in its own cluster, with b, the mean distance to the points of the nearest other cluster: s = (b - a) / max(a, b), which ranges from -1 to 1.

- The silhouette score is the average of s over all points; the higher it is, the better the dataset is divided into well-separated clusters.
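To illustrate the formula above, this sketch computes s(i) by hand with Euclidean distances on a hypothetical five-point dataset and compares it with scikit-learn's `silhouette_samples` and `silhouette_score`. Following scikit-learn's convention, a point that is alone in its cluster gets s = 0.

```python
import numpy as np
from sklearn.metrics import pairwise_distances, silhouette_samples, silhouette_score

def silhouette_manual(X, labels):
    """s(i) = (b - a) / max(a, b) for every sample, using Euclidean distances."""
    D = pairwise_distances(X)
    s = np.zeros(len(X))
    for i in range(len(X)):
        own = labels == labels[i]
        own[i] = False  # exclude the point itself
        if not own.any():  # singleton cluster: s(i) = 0 by convention
            continue
        # a: mean distance to the other points of its own cluster
        a = D[i, own].mean()
        # b: mean distance to the points of the nearest other cluster
        b = min(D[i, labels == c].mean() for c in np.unique(labels) if c != labels[i])
        s[i] = (b - a) / max(a, b)
    return s

# hypothetical toy data and cluster labels
sil_X = np.array([[0.0, 0.0], [0.0, 1.0], [4.0, 0.0], [4.0, 1.0], [10.0, 0.0]])
sil_labels = np.array([0, 0, 1, 1, 1])

sil_values = silhouette_manual(sil_X, sil_labels)
print(sil_values.round(3), round(sil_values.mean(), 3))
```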

def optimise_kmeans(data, max_k):
    k_values = []
    inertias = []
    silhouette_avg = []

    for k in tqdm(range(2, max_k + 1)):
        # fixed random_state so the curves are reproducible between runs
        kmeans = KMeans(n_clusters=k, n_init=10, random_state=42)
        kmeans.fit(data)
        k_values.append(k)
        # elbow: within-cluster sum of squared distances
        inertias.append(kmeans.inertia_)
        # silhouette: mean silhouette coefficient over all samples
        silhouette_avg.append(silhouette_score(data, kmeans.labels_))

    fig, ax = plt.subplots(1, 2, figsize=(10, 3))

    # Elbow method
    ax[0].plot(k_values, inertias)
    ax[0].set_title('Elbow Method')
    ax[0].set_xlabel('Values of K')
    ax[0].set_ylabel('Sum of squared errors')
    ax[0].grid(True)

    # Silhouette
    ax[1].plot(k_values, silhouette_avg, 'v-', color='green')
    ax[1].set_title('Silhouette Score')
    ax[1].set_xlabel('Values of K')
    ax[1].set_ylabel('Score')
    ax[1].grid(True)

    fig.tight_layout(w_pad=4)
    plt.show()

optimise_kmeans(vec, 10)

100%|████████████████████████████████████████████████████████████████████████████████████| 9/9 [01:05<00:00, 7.26s/it]

- The “elbow” point is not clear, so the elbow method alone does not suggest a good value for K.

- The silhouette score drops sharply from K = 2 to K = 3, peaks at K = 4 and becomes erratic afterwards, which suggests that K-means struggles to find well-separated clusters beyond K = 4.

- Based on this, I will use K = 4.

kmeans = KMeans(init = 'random',

n_clusters = 4,

n_init = 10,

random_state = 42)

kmeans.fit(vec)

KMeans(init='random', n_clusters=4, random_state=42)

Some insights about the data

news['clusters'] = kmeans.labels_

words = vectorizer.get_feature_names_out()[0:10]

words

array(['aa', 'aaa', 'aaron', 'ab', 'abandon', 'abandoned', 'abbey', 'abc',

'abiding', 'ability'], dtype=object)

# number of posts in each cluster

review_groups = news.clusters.value_counts()

review_groups

3 3007

2 781

0 700

1 538

Name: clusters, dtype: int64

Checking clusters

words = vectorizer.get_feature_names_out()

# 14 terms with the highest weight (mean TF-IDF) in each cluster centroid

common_words = kmeans.cluster_centers_.argsort()[:,-1:-15:-1]

for num, centroid in enumerate(common_words):

print(str(num) + " : " + ', '.join(words[word] for word in centroid))

0 : game, team, player, year, season, play, hockey, win, last, league, fan, good, would, think

1 : god, jesus, christian, one, would, people, bible, say, believe, church, faith, belief, know, think

2 : window, file, thanks, program, driver, anyone, card, know, please, email, use, graphic, image, using

3 : would, people, one, dont, think, like, get, know, right, gun, time, say, government, state
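The `argsort()[:, -1:-15:-1]` idiom above is compact but easy to misread. This sketch applies the same slicing to a hypothetical 2 x 5 centroid matrix with made-up term names, to show that it returns the indices of the largest weights in descending order (the original cell uses the equivalent of `top_n = 14`).

```python
import numpy as np

# hypothetical centroid matrix: 2 clusters x 5 terms (mean TF-IDF weight per term)
toy_centers = np.array([[0.10, 0.50, 0.05, 0.30, 0.20],
                        [0.40, 0.01, 0.25, 0.02, 0.33]])
toy_terms = np.array(['alpha', 'beta', 'gamma', 'delta', 'epsilon'])

top_n = 3
# argsort() orders each row ascending; the slice [-1:-(top_n + 1):-1] walks back
# from the last (largest) position, so it yields the top_n indices, largest first
toy_top = toy_centers.argsort()[:, -1:-(top_n + 1):-1]

for num, idx in enumerate(toy_top):
    print(num, ':', ', '.join(toy_terms[idx]))
# 0 : beta, delta, epsilon
# 1 : alpha, epsilon, gamma
```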

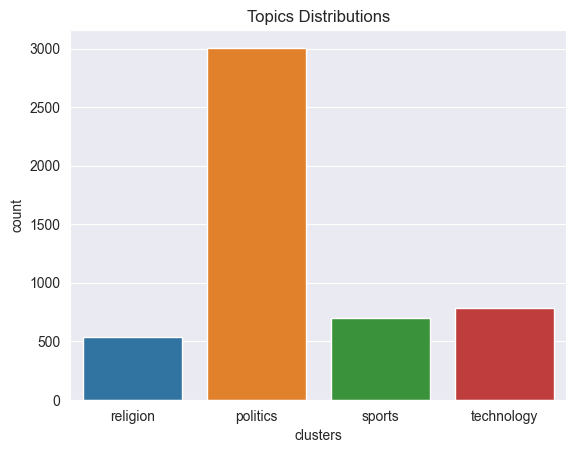

Renaming clusters

- Cluster 0 seems to be related to sports.

- Cluster 1 seems to contain posts about religion.

- Cluster 2 seems to be related to technology in general.

- Cluster 3 seems to be related to politics.

# Renaming clusters
cluster_names = {0: 'sports', 1: 'religion', 2: 'technology', 3: 'politics'}
news['clusters'] = news['clusters'].map(cluster_names)

# show random samples of each group
def sample_reviews(data, amount=1):
    print('')
    for i in data.clusters.unique():
        print(f'cluster: {i}\n')
        for _ in range(amount):
            print(data['content'][data['clusters'] == i].sample(1))
            print('')
        print('-' * 90)

sample_reviews(news, 1)

cluster: religion

721 (Dean and I write lots and lots about absolute...

Name: content, dtype: object

------------------------------------------------------------------------------------------

cluster: politics

1576 %>I dunno, Lemieux? Hmmm...sounds like he\n%>...

Name: content, dtype: object

------------------------------------------------------------------------------------------

cluster: sports

4426 \nPeople are seeming to be less concerned abou...

Name: content, dtype: object

------------------------------------------------------------------------------------------

cluster: technology

185 We have been using Iterated Systems compressio...

Name: content, dtype: object

------------------------------------------------------------------------------------------

sns.countplot(x = news.clusters)

plt.title('Topics Distributions')

plt.show()

Number of words

Counting the number of words in each post.

# num words

pd.set_option('max_colwidth', 100)

news['num_words'] = news["filtered_content"].apply(lambda x: x.split()).apply(len)

news.sample(2)

| | content | filtered_content | clusters | num_words |
|---|---|---|---|---|
| 718 | The most ridiculous example of VR-exploitation I've seen so far is the\n"Virtual Reality Clothin... | the ridiculous example vrexploitation ive seen far virtual reality clothing company recently ope... | politics | 36 |
| 2724 | Please reply via EMail...\n\nWhen I use the terminal software for Windows such as TERMINAL.EXE o... | please reply via email when i use terminal software window terminalexe crossttalk doesnt use who... | technology | 46 |

Number of unique words

# number of unique words

news['num_vocab'] = news['filtered_content'].apply(lambda x: x.split()).apply(set).apply(len)

news.sample(2)

| | content | filtered_content | clusters | num_words | num_vocab |
|---|---|---|---|---|---|
| 3911 | \nIt is more appropriate to address netters with their names as they appear in\ntheir signatures... | it appropriate address netters name appear signature i failed since bother sign posting not poli... | politics | 81 | 74 |
| 3685 | Hi, \n\nI have a simple question. Is it possible to create a OVERLAPPED THICKFRAME\nwindow witho... | hi i simple question is possible create overlapped thickframe window without title bar ie wsover... | technology | 89 | 60 |

Lexical Diversity

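- Lexical diversity can help us understand how complex a text is.

- Texts that are lexically diverse use a wide range of vocabulary, avoid repetition, use precise language and tend to use synonyms to express ideas.

- Note that the ratio computed below (num_words / num_vocab) grows as words are repeated, so higher values indicate lower lexical diversity; its inverse (unique words / total words) is the usual type-token ratio.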
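To make the direction of the two ratios explicit, the sketch below compares the type-token ratio (unique words / total words) with the words-per-unique-word ratio used in this notebook, on two hypothetical texts of equal length. Both measures are sensitive to text length (longer texts tend to repeat more words), so comparisons are fairest between texts of similar size.

```python
def type_token_ratio(text):
    """Unique words / total words: closer to 1.0 means a more varied vocabulary."""
    tokens = text.split()
    return len(set(tokens)) / len(tokens) if tokens else 0.0

def words_per_unique_word(text):
    """Total words / unique words (the ratio computed in this notebook): grows with repetition."""
    tokens = text.split()
    return len(tokens) / len(set(tokens)) if tokens else 0.0

# hypothetical example texts with the same number of words
repetitive = "game game game team team win"
varied = "game team win season league fan"

for name, text in [("repetitive", repetitive), ("varied", varied)]:
    print(name, type_token_ratio(text), words_per_unique_word(text))
# repetitive 0.5 2.0
# varied 1.0 1.0
```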

news['lexical_div'] = news['num_words'] / news['num_vocab']

news.sample(2)

| | content | filtered_content | clusters | num_words | num_vocab | lexical_div |
|---|---|---|---|---|---|---|
| 2082 | Hello, | hello | politics | 1 | 1 | 1.000000 |
| 1328 | \n\nAs to what that headpiece is....\n\n(by chort@crl.nmsu.edu)\n\nSOURCE: AP NEWSWIRE\n\nThe Va... | a headpiece chortcrlnmsuedu source ap newswire the vatican home of genetic misfit michael a gill... | politics | 161 | 127 | 1.267717 |

Average word length

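# note: str.len() counts every character, including the spaces between words,
# so this average is slightly higher than the true mean word length
news["num_char"] = news["filtered_content"].str.len()
news['avg_word_length'] = news['num_char'] / news['num_words']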

news.sample(2)

| | content | filtered_content | clusters | num_words | num_vocab | lexical_div | num_char | avg_word_length |
|---|---|---|---|---|---|---|---|---|
| 4753 | HHHHEEEELLLLPPPP Meeeeeee!\n\n\tI installed a 256 color svga driver for my windows last week. ... | hhhheeeellllpppp meeeeeee i installed color svga driver window last week this driver downloaded ... | technology | 77 | 53 | 1.452830 | 534 | 6.935065 |
| 2879 | {Dan Johnson asked for evidence that the most effective abuse \nrecovery programs involve meetin... | dan johnson asked evidence effective abuse recovery program involve meeting people spiritual nee... | politics | 38 | 29 | 1.310345 | 276 | 7.263158 |

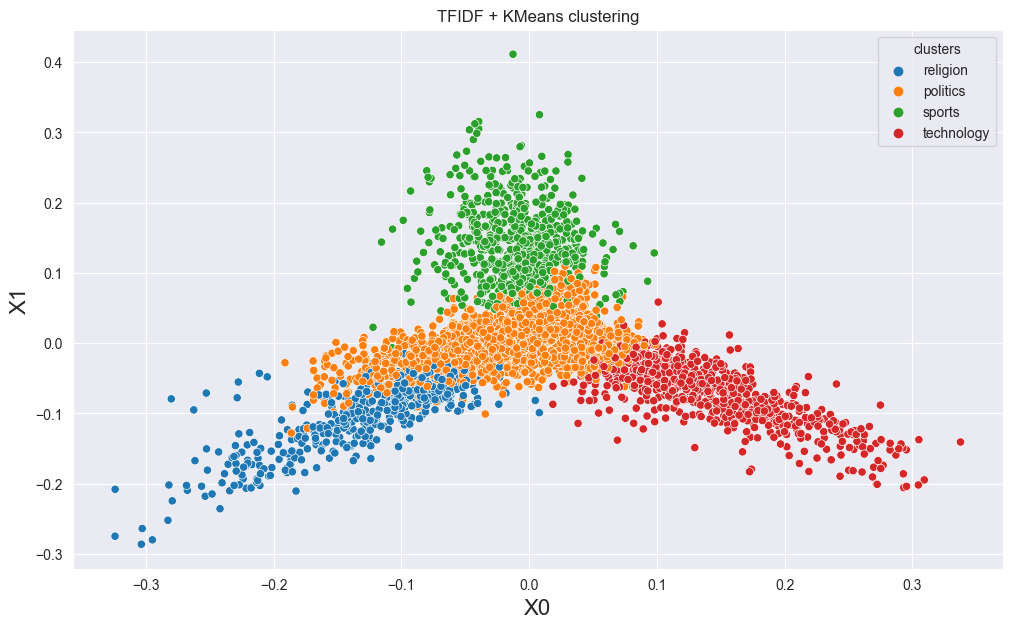

Applying PCA (Principal Component Analysis)

- PCA helps us visualize the clustered data.

- It reduces the dimensionality of the data by projecting it onto the directions of highest variance (the principal components); keeping only the first two lets us draw a scatter plot.

- It gives a rough visual check of whether the chosen K splits the data into well-separated groups, keeping in mind that two components capture only part of the variance of the TF-IDF matrix.
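The projection itself can be sketched with NumPy's SVD: center the data, take the top right-singular vectors and project onto them. The data here is hypothetical random noise with very different per-feature variances; the result is compared with scikit-learn's PCA only up to the sign of each component, since principal components are defined up to sign.

```python
import numpy as np
from sklearn.decomposition import PCA

# hypothetical data: 100 samples, 5 features with very different variances
rng = np.random.default_rng(0)
pca_X = rng.normal(size=(100, 5)) * np.array([5.0, 3.0, 1.0, 0.5, 0.1])

# PCA by hand: center each feature, then use the top right-singular vectors
centered = pca_X - pca_X.mean(axis=0)
U, S, Vt = np.linalg.svd(centered, full_matrices=False)
manual_2d = centered @ Vt[:2].T          # coordinates on the first two components
manual_ratio = S**2 / (S**2).sum()       # share of variance per component

toy_pca = PCA(n_components=2)
sklearn_2d = toy_pca.fit_transform(pca_X)

print(manual_ratio[:2].round(3), toy_pca.explained_variance_ratio_.round(3))
```

The explained-variance ratio is a quick way to judge how much of the data a 2-D scatter plot actually represents.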

# PCA Decomposition
pca = PCA(n_components=2, random_state=42)
# densify the sparse TF-IDF matrix and project it onto 2 principal components
pca_vecs = pca.fit_transform(vec.toarray())

news['x0'] = pca_vecs[:, 0]
news['x1'] = pca_vecs[:, 1]

plt.figure(figsize=(12, 7))
plt.title("TFIDF + KMeans clustering")
plt.xlabel("X0", fontdict={'fontsize': 16})
plt.ylabel("X1", fontdict={'fontsize': 16})
sns.scatterplot(data=news, x='x0', y='x1', hue='clusters', palette='tab10')
plt.show()

An interactive view

In this plot you can hover over any point to read its post and check whether it is related to its assigned cluster.

# transforming the vocabulary into a dataframe
# note: vectorizer.vocabulary_ maps each term to its column index in the TF-IDF matrix, not to a count
vocab = vectorizer.vocabulary_
data = {'Words': list(vocab.keys()),
        'Index': list(vocab.values())}
df2 = pd.DataFrame(data)

# Visualizing groups

alt.Chart(news.sample(4000)).mark_circle(

size = 100

).encode(

x = 'x0',

y = 'x1',

color = 'clusters:N',

tooltip = 'content'

).properties(

width=750,

height=500

).interactive()

The End!

Please feel free to contact me if I did something wrong.

Full code HERE